Challenges of monitoring ML models in production

30 Sep 2020 by dzlab

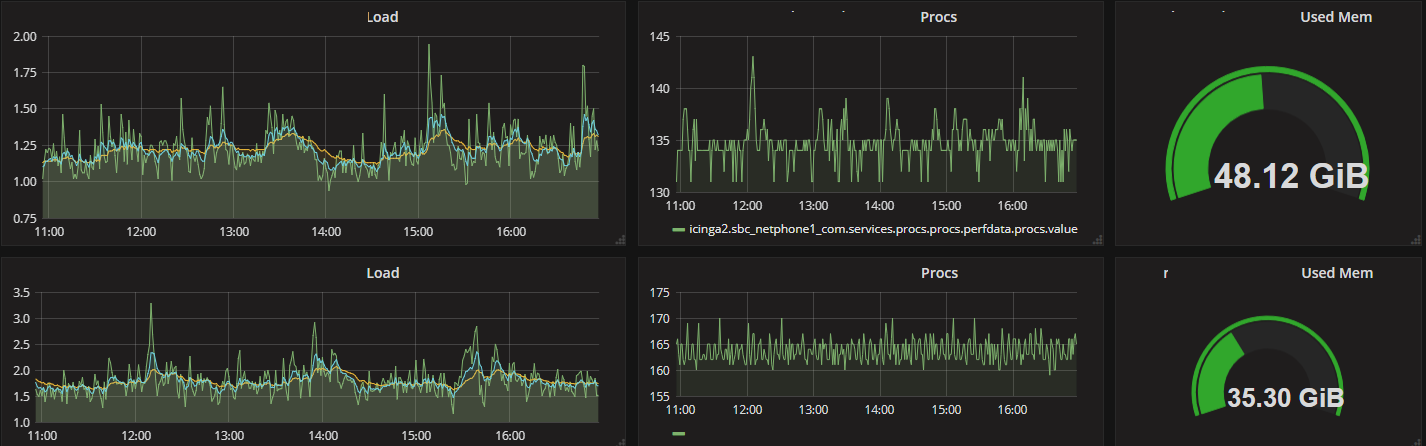

To ensure service continuity and meet a minimum SLA (Service Level Agreement), traditional applications are deployed alongside a monitoring system. Such a system logs metrics like request frequency, latency, and server load, and triggers actions such as raising alerts when the service is interrupted.

Similarly, as part of an MLOps paradigm, Machine Learning deployments need to be monitored to keep track of the model’s health and to take action when performance metrics degrade. Keep in mind that a trained model’s performance metrics are measured on offline datasets and do not guarantee the same performance once the model goes live. Unfortunately, monitoring models is very challenging: there is a lack of tools and systems, and even of a common understanding within the MLOps community of what an ML monitoring system should look like.

However, some tools that ML practitioners use during training can also be used once the model is deployed, for instance model performance metrics and model explainability techniques. But this is not enough, because another dimension needs to be monitored: the data that the model receives to generate predictions. Take as an example a model that was trained on cat pictures but suddenly starts receiving dog pictures during deployment (a problem known as data drift). Furthermore, the presence of outliers in the new data can significantly degrade the deployed model’s performance.

The following sections discuss key challenges of monitoring Machine Learning models in production.

Performance metrics

Labeling the data can be challenging since it is usually a manual task that requires domain knowledge (e.g. labeling medical images), which makes it time-consuming and expensive. But even when labels are available (e.g. in a time series forecasting task, where the true values eventually arrive), it is still challenging to use them to calculate the model’s performance:

- How to handle metrics calculation? Labels have to be fed to a parallel system (e.g. a different endpoint) that will calculate user-defined metrics, i.e. either standard ML metrics (e.g. accuracy) or domain/business-specific ones.

- How to keep metrics synchronized? Most metrics are stateful, i.e. their calculation requires previous values in addition to the current one, so keeping them synchronized at scale is challenging.

- When should a metric be calculated? Some metrics are useful when calculated over the lifetime of the model deployment, while others are meaningful when calculated at a given point in time.

- What threshold to use for a metric? To act on the calculated metrics (e.g. raise an alert when a metric deteriorates), thresholds need to be set, and finding the right value to limit false alarms can be challenging and requires domain knowledge.
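The stateful-metric and threshold questions above can be made concrete with a small sketch. `AccuracyMonitor` is a hypothetical, single-process helper that assumes each prediction has already been joined with its (possibly delayed) label before `update` is called. It keeps a lifetime count alongside a sliding window, and only alerts once the window is full. The window size and threshold are illustrative; in a real deployment this state would have to live in a shared store to stay synchronized across replicas, which the sketch ignores.

```python
from collections import deque


class AccuracyMonitor:
    """Hypothetical helper tracking lifetime and sliding-window accuracy."""

    def __init__(self, window: int = 100, threshold: float = 0.8):
        self.recent = deque(maxlen=window)  # correctness flags of the last `window` labeled predictions
        self.total = 0    # lifetime number of labeled predictions
        self.correct = 0  # lifetime number of correct predictions
        self.threshold = threshold  # illustrative alert level, not a recommendation

    def update(self, prediction, label) -> None:
        """Record one prediction once its label is known."""
        hit = prediction == label
        self.recent.append(hit)
        self.total += 1
        self.correct += int(hit)

    def lifetime_accuracy(self) -> float:
        return self.correct / self.total if self.total else float("nan")

    def window_accuracy(self) -> float:
        return sum(self.recent) / len(self.recent) if self.recent else float("nan")

    def should_alert(self) -> bool:
        # Wait for a full window so that a handful of early errors cannot trigger an alarm.
        window_full = len(self.recent) == self.recent.maxlen
        return window_full and self.window_accuracy() < self.threshold


monitor = AccuracyMonitor(window=4, threshold=0.75)
for pred, label in [(1, 1), (0, 0), (1, 0), (1, 0)]:
    monitor.update(pred, label)
print(monitor.lifetime_accuracy(), monitor.window_accuracy(), monitor.should_alert())
# 0.5 0.5 True
```

Keeping both the lifetime and the windowed value answers the "when should a metric be calculated" question for the two cases listed: the lifetime figure moves slowly, while the window reacts to recent degradation.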

Proxy metrics

Unfortunately, it is not always possible to monitor the model’s performance in a live environment, as this requires access to labels, which can be impractical due to their operational or financial cost. In this case, the statistical characteristics of the model’s input and output data can be monitored instead, as a proxy for the model’s performance.
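One common proxy, swapped in here as an example since the text does not prescribe one, is the Population Stability Index (PSI), which compares the binned distribution of the model’s output scores in production against a reference sample such as the validation set. The sketch below assumes scores are probabilities in [0, 1] and uses equal-width bins; `psi` is a hypothetical helper, not a library function.

```python
import math


def psi(reference, production, bins=10, eps=1e-6):
    """Population Stability Index between two samples of scores in [0, 1].

    Hypothetical helper: equal-width bins; `eps` avoids log(0) for empty bins.
    """

    def proportions(scores):
        counts = [0] * bins
        for s in scores:
            # Map the score to a bin index, clamping 1.0 (and out-of-range values) to the edges.
            idx = min(max(int(s * bins), 0), bins - 1)
            counts[idx] += 1
        return [max(c / len(scores), eps) for c in counts]

    expected = proportions(reference)  # e.g. scores on the validation set
    actual = proportions(production)   # scores logged in production
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


reference_scores = [i / 100 for i in range(100)]
print(psi(reference_scores, reference_scores))  # 0.0
print(psi(reference_scores, [0.95] * 100))      # large: every production score falls in one bin
```

A frequently quoted rule of thumb treats a PSI below 0.1 as stable and above 0.25 as a significant shift, but these cut-offs are conventions to be validated per application, not guarantees about model performance.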

Outlier values

Generalization is a well-known challenge for ML models: a model can perform poorly on data unlike what it saw during training. Outliers in the input data are therefore a serious problem and should be flagged as anomalies. Choosing the right outlier detector for a specific application depends on:

- The modality and dimensionality of the data

- The availability of labeled normal vs outlier data
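For the simplest case (low-dimensional tabular data with no labeled outliers), an unsupervised detector can be fitted once on the training data. The sketch below swaps in the median/MAD "modified z-score" as one such technique; `ModifiedZScoreDetector` is a hypothetical name, and the 3.5 cut-off is the value commonly attributed to Iglewicz and Hoaglin, to be tuned per application.

```python
import statistics


class ModifiedZScoreDetector:
    """Hypothetical per-feature outlier detector based on the median and MAD."""

    def __init__(self, threshold: float = 3.5):
        self.threshold = threshold
        self.medians = []
        self.mads = []

    def fit(self, rows):
        columns = list(zip(*rows))  # transpose: one tuple of values per feature
        self.medians = [statistics.median(col) for col in columns]
        # Median absolute deviation: a spread estimate that outliers barely move.
        self.mads = [
            statistics.median(abs(v - med) for v in col)
            for col, med in zip(columns, self.medians)
        ]
        return self

    def is_outlier(self, row) -> bool:
        for value, med, mad in zip(row, self.medians, self.mads):
            if mad == 0:
                continue  # feature was (nearly) constant in training: skip it
            score = 0.6745 * (value - med) / mad  # modified z-score
            if abs(score) > self.threshold:
                return True
        return False


train = [(float(i), float(i % 7)) for i in range(100)]
detector = ModifiedZScoreDetector().fit(train)
print(detector.is_outlier((50.0, 3.0)))    # False
print(detector.is_outlier((1000.0, 3.0)))  # True
```

Median and MAD are used instead of mean and standard deviation so that outliers already present in the training data do not inflate the spread estimate and mask themselves.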

Furthermore, the choice of outlier detector has implications on how it will be deployed:

- An offline detector (pre-trained) can be deployed as a separate static ML model

- An online detector has to be updated continuously and is thus deployed as a stateful service.
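To make the offline/online distinction concrete, here is a sketch of an online detector for a single numeric feature. It keeps a running mean and variance, updated with Welford's algorithm on every request, and that evolving state is exactly what forces a stateful deployment. `OnlineZScoreDetector`, the z-score threshold of 3 and the warm-up length are illustrative assumptions.

```python
import math


class OnlineZScoreDetector:
    """Hypothetical stateful outlier detector for one numeric feature."""

    def __init__(self, threshold: float = 3.0, warmup: int = 30):
        self.threshold = threshold
        self.warmup = warmup  # minimum observations before anything is flagged
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean

    def score(self, x: float) -> float:
        """Absolute z-score of x under the current running statistics."""
        if self.n < 2:
            return 0.0
        std = math.sqrt(self.m2 / (self.n - 1))
        return abs(x - self.mean) / std if std > 0 else 0.0

    def update(self, x: float) -> bool:
        """Score x against the current state, then fold x into the state."""
        is_outlier = self.n >= self.warmup and self.score(x) > self.threshold
        # Welford's update of the running mean and M2.
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        return is_outlier


detector = OnlineZScoreDetector(threshold=3.0, warmup=5)
stream = [10.0, 11.0, 9.0, 10.0, 10.5, 9.5, 10.2, 100.0]
print([detector.update(x) for x in stream])
# [False, False, False, False, False, False, False, True]
```

Folding every value, including flagged ones, into the running statistics lets the detector adapt to gradual change, but a burst of outliers will also inflate the variance; skipping the update for flagged points is a common alternative.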

Distribution shift

In contrast to outliers, which usually refer to individual instances, data drift (or shift) detection uses statistical hypothesis tests to determine whether two samples are drawn from the same underlying distribution. In our case, the drift detector tries to identify when the distribution of the input data to the deployed model starts to diverge from that of the training data, making the model’s predictions unreliable. One useful application of this is helping decide when the model in production needs to be retrained.
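The two-sample Kolmogorov-Smirnov (KS) test is one common choice of hypothesis test for a single numeric feature. The pure-Python sketch below computes the KS statistic and an asymptotic p-value (the approximation given in Numerical Recipes), then tests each feature separately with a Bonferroni correction. `ks_two_sample` and `detect_drift` are hypothetical helpers; in practice one would likely call `scipy.stats.ks_2samp` instead.

```python
import bisect
import math


def ks_two_sample(a, b):
    """Two-sample Kolmogorov-Smirnov test: returns (D, approximate p-value)."""
    a, b = sorted(a), sorted(b)
    n, m = len(a), len(b)
    # D is the largest gap between the two empirical CDFs, checked at every observed value.
    d = max(
        abs(bisect.bisect_right(a, x) / n - bisect.bisect_right(b, x) / m)
        for x in a + b
    )
    ne = n * m / (n + m)
    lam = (math.sqrt(ne) + 0.12 + 0.11 / math.sqrt(ne)) * d
    # Asymptotic Kolmogorov tail: Q(lam) = 2 * sum_{j>=1} (-1)^(j-1) * exp(-2 j^2 lam^2).
    total, sign, prev = 0.0, 2.0, 0.0
    for j in range(1, 101):
        term = sign * math.exp(-2.0 * j * j * lam * lam)
        total += term
        if abs(term) <= 1e-3 * prev or abs(term) <= 1e-8 * total:
            return d, min(max(total, 0.0), 1.0)
        sign, prev = -sign, abs(term)
    return d, 1.0  # series did not converge: lam is tiny, so the samples look alike


def detect_drift(reference, production, alpha=0.05):
    """Indices of features whose KS p-value is below a Bonferroni-corrected alpha."""
    n_features = len(reference[0])
    drifted = []
    for k in range(n_features):
        _, p = ks_two_sample([row[k] for row in reference], [row[k] for row in production])
        if p < alpha / n_features:
            drifted.append(k)
    return drifted


train_rows = [(i, i) for i in range(100)]
live_rows = [(i, i + 50) for i in range(100)]  # only feature 1 is shifted
print(detect_drift(train_rows, live_rows))  # [1]
```

Testing features one at a time is simple and interpretable, but it ignores correlations between features, so a drift that only shows up in the joint distribution can go unnoticed.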

Drift detectors can be classified by the type of shift they target:

- Covariate shift, when the input data distribution $p(x)$ changes while the conditional label distribution $p(y|x)$ does not.

- Label shift, when the label distribution $p(y)$ changes while the conditional distribution $p(x|y)$ does not.
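Under label shift, $p(x|y)$ is fixed, so for a fixed model the distribution of predicted classes generally moves when $p(y)$ does. That gives a label-free signal: compare predicted-class frequencies between a reference set and production traffic. The sketch below uses total variation distance with a hypothetical threshold of 0.1 as a simple heuristic, not a formal hypothesis test; all function names are illustrative. Covariate shift can move this signal too, so an alert does not by itself tell which kind of shift occurred.

```python
from collections import Counter


def label_distribution(labels):
    """Relative frequency of each class in a sequence of (predicted) labels."""
    counts = Counter(labels)
    total = len(labels)
    return {cls: c / total for cls, c in counts.items()}


def total_variation(p, q):
    """Total variation distance between two discrete distributions (0 = identical, 1 = disjoint)."""
    classes = set(p) | set(q)
    return 0.5 * sum(abs(p.get(c, 0.0) - q.get(c, 0.0)) for c in classes)


def label_shift_alert(ref_preds, prod_preds, threshold=0.1):
    # `threshold` is a hypothetical value; tune it on historical data.
    distance = total_variation(label_distribution(ref_preds), label_distribution(prod_preds))
    return distance > threshold


reference_preds = ["cat"] * 50 + ["dog"] * 50
production_preds = ["cat"] * 90 + ["dog"] * 10
print(label_shift_alert(reference_preds, production_preds))  # True
```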

When the data is high-dimensional (e.g. images), one can apply a dimensionality reduction step before running the hypothesis test.
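As an illustration, the sketch below reduces dimensionality with a fixed random Gaussian projection (swapped in here because it needs no training; PCA or an autoencoder are common alternatives), then runs a KS test on each projected dimension with a Bonferroni correction, using the standard large-sample critical value $D_\alpha = \sqrt{-\tfrac{1}{2}\ln(\alpha/2)}\,\sqrt{(n+m)/(nm)}$. All function names, the default of 8 output dimensions, and the synthetic data are assumptions.

```python
import bisect
import math
import random


def random_projection(rows, out_dim, seed=0):
    """Project each row onto `out_dim` fixed random Gaussian directions.

    Reference and production data must use the same seed so that both are
    projected onto the same directions.
    """
    rng = random.Random(seed)
    in_dim = len(rows[0])
    directions = [[rng.gauss(0.0, 1.0) for _ in range(in_dim)] for _ in range(out_dim)]
    return [[sum(w * x for w, x in zip(d, row)) for d in directions] for row in rows]


def ks_statistic(a, b):
    """Largest absolute gap between the empirical CDFs of samples a and b."""
    a, b = sorted(a), sorted(b)
    return max(
        abs(bisect.bisect_right(a, x) / len(a) - bisect.bisect_right(b, x) / len(b))
        for x in a + b
    )


def drift_after_projection(reference, production, out_dim=8, alpha=0.05, seed=0):
    """True if any projected dimension fails a Bonferroni-corrected two-sample KS test."""
    ref_z = random_projection(reference, out_dim, seed)
    prod_z = random_projection(production, out_dim, seed)
    n, m = len(ref_z), len(prod_z)
    level = alpha / out_dim  # Bonferroni correction across the projected dimensions
    # Large-sample critical value of the KS statistic at significance `level`.
    d_crit = math.sqrt(-0.5 * math.log(level / 2)) * math.sqrt((n + m) / (n * m))
    return any(
        ks_statistic([z[k] for z in ref_z], [z[k] for z in prod_z]) > d_crit
        for k in range(out_dim)
    )


# Hypothetical 64-dimensional inputs (think flattened image patches).
reference = [[((i * 31 + j * 17) % 101) / 101 for j in range(64)] for i in range(200)]
shifted = [[x + 2.0 for x in row] for row in reference]
print(drift_after_projection(reference, reference))  # False
print(drift_after_projection(reference, shifted))
```

Projecting to a handful of dimensions keeps the number of tests, and therefore the severity of the Bonferroni correction, small, at the cost of possibly missing a shift that lies in directions the projection discards.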