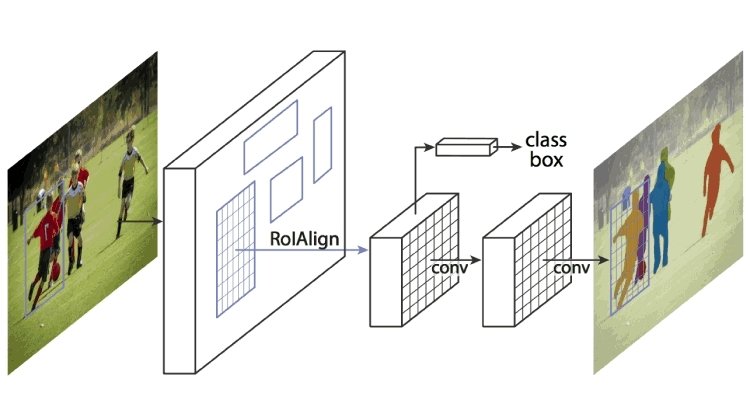

Instance segmentation is a computer vision task that identifies every object in an image by detecting its bounding box and class, and also predicts a pixel-level mask for each instance. For example, in an image with many people, instance segmentation separates each person as a distinct entity.
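
To make the "one mask per object" idea concrete, here is a minimal NumPy sketch. The tiny 4x6 "image" and the two hand-drawn masks are made up for illustration; they only show that two objects of the same class keep separate masks instead of being merged into one.

```python
import numpy as np

# Two hypothetical "person" instances on a tiny 4x6 image
# (1 = pixel belongs to the object, 0 = background).
person_a = np.zeros((4, 6), dtype=np.uint8)
person_a[1:4, 0:2] = 1
person_b = np.zeros((4, 6), dtype=np.uint8)
person_b[0:3, 4:6] = 1

# A single per-class mask would merge both people into one region.
merged_mask = np.maximum(person_a, person_b)

# Instance segmentation keeps one mask per object: (num_instances, height, width).
instance_masks = np.stack([person_a, person_b])

print(merged_mask.shape)     # (4, 6)
print(instance_masks.shape)  # (2, 4, 6)
```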

One of the best-performing models for this task is Mask R-CNN, which builds on Faster R-CNN. In addition to predicting a bounding box and class for each object, Mask R-CNN predicts an object mask in parallel. It is also easy to repurpose for other tasks, e.g., human pose estimation.

In this article, we will use TensorFlow Hub to load an implementation of Mask R-CNN that was pre-trained on the COCO dataset and perform out-of-the-box instance segmentation on custom images.

First, let's install the TensorFlow Object Detection API so we can use its visualization tools to draw the bounding boxes and segmentation masks:

    %%capture
    %%bash
    git clone --depth 1 https://github.com/tensorflow/models
    cd models/research/
    protoc object_detection/protos/*.proto --python_out=.
    cp object_detection/packages/tf2/setup.py .
    python -m pip install .
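
Once the installation finishes, a quick sanity check can confirm that the package is importable. This is a minimal sketch using only the standard library; `object_detection` is the package name imported later in this article.

```python
import importlib.util

# find_spec returns None when a top-level package cannot be found.
is_installed = importlib.util.find_spec('object_detection') is not None
print('object_detection importable:', is_installed)
```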

Next, let's import all the packages we need:

    import glob
    from io import BytesIO

    import matplotlib.pyplot as plt
    import numpy as np
    import tensorflow as tf
    import tensorflow_hub as hub
    from PIL import Image
    from tqdm import tqdm

    from object_detection.utils import ops
    from object_detection.utils import visualization_utils as viz
    from object_detection.utils.label_map_util import create_category_index_from_labelmap

    %matplotlib inline

Then, we define a helper function to load an image from disk into a NumPy array:

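    def load_image(path):
        """Load an image from disk as a uint8 array of shape (1, height, width, 3)."""
        image_data = tf.io.gfile.GFile(path, 'rb').read()
        # Force three channels so grayscale or RGBA files also fit the target shape
        image = Image.open(BytesIO(image_data)).convert('RGB')
        width, height = image.size
        shape = (1, height, width, 3)
        image = np.array(image.getdata())
        image = image.reshape(shape).astype('uint8')
        return image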
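
The helper returns an array with a leading dimension of size 1, i.e. shape (1, height, width, 3). A minimal sketch of that shape convention, using a synthetic array instead of a file on disk (the 480x640 size is arbitrary):

```python
import numpy as np

# Synthetic RGB image standing in for a decoded photo: (height, width, channels).
height, width = 480, 640
image = np.zeros((height, width, 3), dtype=np.uint8)

# Add a leading dimension of size 1, as load_image does with its reshape.
batch = image[np.newaxis, ...]

print(batch.shape)  # (1, 480, 640, 3)
print(batch.dtype)  # uint8
```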

Next, we define a helper function that takes the predictions of the Mask R-CNN model and extracts the bounding box, class, score, and mask of each detected instance:

    def process_predictions(predictions, image_height, image_width):
        # Convert predictions to NumPy
        model_output = {k: v.numpy() for k, v in predictions.items()}

        # Extract masks from predictions
        detection_masks = model_output['detection_masks'][0]
        detection_masks = tf.convert_to_tensor(detection_masks)

        # Extract bounding boxes from predictions
        detection_boxes = model_output['detection_boxes'][0]
        detection_boxes = tf.convert_to_tensor(detection_boxes)

        # Reframe box-relative masks into full-size image masks
        detection_masks_reframed = ops.reframe_box_masks_to_image_masks(
            detection_masks, detection_boxes, image_height, image_width)
        # Binarize the soft masks at 0.5
        detection_masks_reframed = tf.cast(detection_masks_reframed > 0.5, tf.uint8)
        model_output['detection_masks_reframed'] = detection_masks_reframed.numpy()

        # Extract bounding boxes, classes, scores, and masks
        boxes = model_output['detection_boxes'][0]
        classes = model_output['detection_classes'][0].astype('int')
        scores = model_output['detection_scores'][0]
        masks = model_output['detection_masks_reframed']

        return boxes, classes, scores, masks
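
The reframing step is the least obvious part: the model predicts each mask relative to its bounding box, so the mask has to be resized and pasted at the box location in the full image. The following is a simplified NumPy sketch of that idea for a single mask. It is not the implementation in `object_detection.utils.ops`, which works on TensorFlow tensors for all detections at once and may resample differently. The function name `paste_box_mask`, the nearest-neighbour sampling, and the toy 2x2 mask are assumptions made for illustration.

```python
import numpy as np

def paste_box_mask(box_mask, box, image_height, image_width, threshold=0.5):
    """Paste a box-relative soft mask into a full-size binary image mask.

    box is (ymin, xmin, ymax, xmax) in normalized [0, 1] coordinates.
    """
    ymin, xmin, ymax, xmax = box
    # Convert normalized corners to pixel coordinates, keeping the box
    # inside the image and at least one pixel tall and wide.
    top = min(max(int(round(ymin * image_height)), 0), image_height - 1)
    left = min(max(int(round(xmin * image_width)), 0), image_width - 1)
    bottom = min(max(int(round(ymax * image_height)), top + 1), image_height)
    right = min(max(int(round(xmax * image_width)), left + 1), image_width)
    box_h, box_w = bottom - top, right - left

    # For every pixel inside the box, pick the nearest cell of the small mask.
    mask_h, mask_w = box_mask.shape
    rows = np.arange(box_h) * mask_h // box_h
    cols = np.arange(box_w) * mask_w // box_w
    resized = box_mask[np.ix_(rows, cols)]

    # Threshold the resized mask and place it on an empty canvas.
    full_mask = np.zeros((image_height, image_width), dtype=np.uint8)
    full_mask[top:bottom, left:right] = (resized > threshold).astype(np.uint8)
    return full_mask

# Toy example: a 2x2 soft mask pasted into the centre of an 8x8 image.
box_mask = np.array([[0.9, 0.1],
                     [0.8, 0.7]])
full_mask = paste_box_mask(box_mask, (0.25, 0.25, 0.75, 0.75), 8, 8)
print(full_mask.shape)       # (8, 8)
print(int(full_mask.sum()))  # 12
```

Only the pixels inside the box can be set; everything outside stays at zero, which is why the resulting masks can be drawn directly on the original image.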

We then define a function that draws the extracted bounding boxes and masks on the original image:

    def visualize_predictions(image, boxes, classes, scores, masks, category_index):
        """Visualize detections and bounding boxes, scores, classes, and masks"""
        image_with_mask = image.copy()[0]
        viz.visualize_boxes_and_labels_on_image_array(
            image=image_with_mask,
            boxes=boxes,
            classes=classes,
            scores=scores,
            category_index=category_index,
            use_normalized_coordinates=True,
            max_boxes_to_draw=200,
            min_score_thresh=0.30,
            agnostic_mode=False,
            instance_masks=masks,
            line_thickness=5
        )
        return image_with_mask
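
The `min_score_thresh` argument controls which detections get drawn. Roughly, it acts like the boolean filter sketched below; the scores and class IDs here are invented for illustration, and the strict `>` comparison is an assumption about how the library applies the threshold.

```python
import numpy as np

# Hypothetical detections, sorted from most to least confident.
scores = np.array([0.98, 0.75, 0.31, 0.12])
classes = np.array([1, 2, 2, 3])

min_score_thresh = 0.30  # same value as in visualize_predictions
keep = scores > min_score_thresh

print(classes[keep])  # [1 2 2]
print(scores[keep])   # [0.98 0.75 0.31]
```

Raising the threshold gives a cleaner image with fewer false positives, at the cost of dropping low-confidence objects.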

To map a class ID to a label, we need to load the original COCO label map, which is available in the TensorFlow models package:

    labels_path = 'models/research/object_detection/data/mscoco_label_map.pbtxt'
    CATEGORY_IDX = create_category_index_from_labelmap(labels_path)
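
The resulting category index is a dictionary keyed by class ID. The sketch below uses a hand-written three-entry excerpt rather than the real file, and assumes the usual layout of `{'id': ..., 'name': ...}` entries; `label_for` is a hypothetical helper, not part of the Object Detection API.

```python
# Hand-written excerpt mimicking the structure of a category index
# (assumed layout: class ID -> {'id': ..., 'name': ...}).
category_idx_excerpt = {
    1: {'id': 1, 'name': 'person'},
    2: {'id': 2, 'name': 'bicycle'},
    3: {'id': 3, 'name': 'car'},
}

def label_for(class_id, category_index):
    """Return the readable label for a class ID, or a fallback if unknown."""
    return category_index.get(class_id, {}).get('name', 'N/A')

print(label_for(2, category_idx_excerpt))   # bicycle
print(label_for(99, category_idx_excerpt))  # N/A
```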

Now, let's load the Mask R-CNN model and its pre-trained weights from TF Hub, where it is available at https://tfhub.dev/tensorflow/mask_rcnn/inception_resnet_v2_1024x1024/1:

    MODEL_PATH = 'https://tfhub.dev/tensorflow/mask_rcnn/inception_resnet_v2_1024x1024/1'
    mask_rcnn = hub.load(MODEL_PATH)

Next, let's download a few images for testing:

    %%bash
    mkdir -p images
    curl -s -o images/bicycle1.jpg https://cdn.pixabay.com/photo/2016/11/30/12/29/bicycle-1872682_960_720.jpg
    curl -s -o images/bicycle2.jpg https://cdn.pixabay.com/photo/2016/11/22/23/49/cyclists-1851269_960_720.jpg
    curl -s -o images/animal1.jpg https://cdn.pixabay.com/photo/2014/05/20/21/20/bird-349026_960_720.jpg
    curl -s -o images/animal2.jpg https://cdn.pixabay.com/photo/2018/05/27/18/19/sparrows-3434123_960_720.jpg
    curl -s -o images/car1.jpg https://cdn.pixabay.com/photo/2016/02/13/13/11/oldtimer-1197800_960_720.jpg
    curl -s -o images/car2.jpg https://cdn.pixabay.com/photo/2016/09/11/10/02/renault-juvaquatre-1661009_960_720.jpg

We then iterate over the test images, run each one through the Mask R-CNN model, and draw the predicted bounding boxes and masks:

    images = []
    for image_path in tqdm(glob.glob('images/*')):
        # Load image
        in_image = load_image(image_path)
        # Make predictions with Mask R-CNN
        predictions = mask_rcnn(in_image)
        # Process predictions
        boxes, classes, scores, masks = process_predictions(
            predictions, in_image.shape[1], in_image.shape[2])
        # Visualize boxes and masks
        out_image = visualize_predictions(in_image, boxes, classes, scores, masks, CATEGORY_IDX)
        images.append(out_image)

Finally, we can display the output images and inspect how well Mask R-CNN performs:

    # 2 x 3 grid: one cell for each of the six test images
    figure, axis = plt.subplots(2, 3, figsize=(15, 8))
    for index, image in enumerate(images):
        row, col = divmod(index, 3)
        axis[row, col].imshow(image)
        axis[row, col].axis('off')
    plt.tight_layout()
    plt.show()
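
The 2 x 3 grid above is hard-coded for six images. If you test with a different number of images, the grid shape and cell positions can be derived instead. A minimal sketch, where `grid_positions` is a hypothetical helper name:

```python
import math

def grid_positions(num_images, num_cols=3):
    """Return the grid shape and the (row, col) cell of each image index."""
    num_rows = math.ceil(num_images / num_cols)
    cells = [divmod(index, num_cols) for index in range(num_images)]
    return (num_rows, num_cols), cells

shape, cells = grid_positions(6)
print(shape)  # (2, 3)
print(cells)  # [(0, 0), (0, 1), (0, 2), (1, 0), (1, 1), (1, 2)]
```

The returned shape can be passed to `plt.subplots`, and each `(row, col)` pair indexes the matching axis.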

As you can see from the predicted masks and bounding boxes, the model did a good job on our sample images.

I hope you enjoyed this article. Feel free to leave a comment or reach out on Twitter @bachiirc.